In response to the SCoPEd initial consultation results, a joint letter to BACP, UKCP and BPC has been signed by the Alliance for Counselling and Psychotherapy, the National Counselling Society, Psychotherapists and Counsellors for Social Responsibility, the Psychotherapy and Counselling Union and the College of Psychoanalysts.

Dear Chairs and Chief Executives of BACP, UKCP and BPC,

The Alliance for Counselling and Psychotherapy, the National Counselling Society, Psychotherapists and Counsellors for Social Responsibility, the Psychotherapy and Counselling Union and the College of Psychoanalysts have noted your claims hailing the results of the recent consultation.

We have analysed the available statistics and, on behalf of our combined memberships of well over 2,000 practitioners, nearly all of whom are registered with you, respectfully beg to differ.

The results are hardly a ringing endorsement of the SCoPEd project (dramatically so, as far as BACP is concerned).

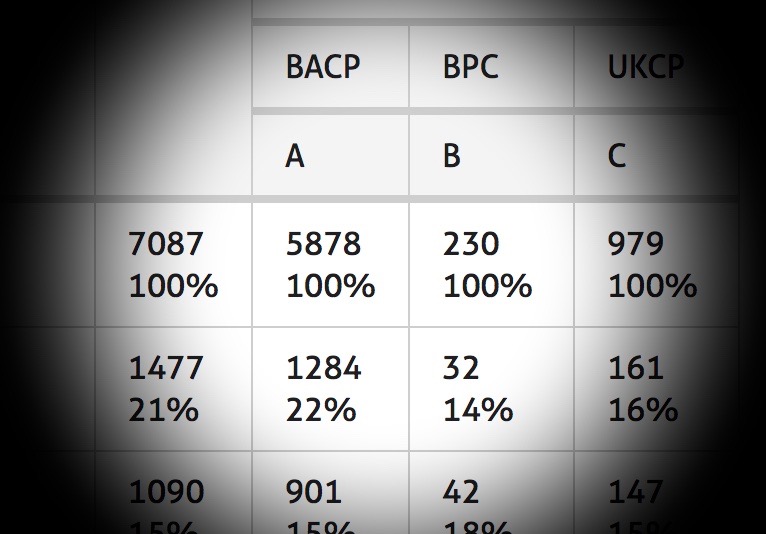

The return rates are assuredly below acceptable minima for the adoption of such wholesale change in any profession. We calculate an overall survey return rate of around 13 per cent (7,087 respondents out of 53,500 members), or about one in eight.

BACP’s return rate appears to be 13 per cent (5,878 respondents out of 44,000 members). (If the smaller register were used, the return rate would have been higher.)

BPC’s return rate appears to be 15 per cent (230 respondents out of 1,500 members).

And UKCP’s return rate appears to be 12 per cent (979 respondents out of 8,000 members).

Our organisations consider that it would be foolhardy to attempt to make such fundamental changes to the structure of our professions on the basis of the level of response garnered so far. Don’t forget, it is you yourselves, not only your critics, who have asserted that the changes will be fundamental. We will continue proactively to oppose any such developments.

Nor do the more detailed statistics offer you anything like the succour that you have claimed. Drilling down, we find that:

60 per cent of respondents did not believe SCoPEd would improve things for clients.

46 per cent did not believe it would help recruitment.

39 per cent did not believe it would make things clearer for trainees.

46 per cent did not believe it would help professional organisations to promote therapy.

Given that the leaderships of the three organisations so strongly supported the direction of travel of the project, these figures should make for depressing reading for you.

And among BACP members, the positive responses were even lower. Only 36 per cent of BACP respondents to the survey believe SCoPEd will make things easier for clients trying to find the right help (Question 1a). This is just 2,131 members, which is about 5 per cent of BACP’s total membership.

For comparison, and to put these returns into some kind of proportion, this is 1,000 fewer than the number of people, mainly but not all BACP members, who signed the petition to scrap the project.

It also contrasts fairly dramatically with the 57 per cent of BPC and 56 per cent of UKCP respondents who believe the framework would be positive for clients. This intriguing difference is reflected throughout the results, as laid out below.

On the question of how useful SCoPEd will be for employers (Q1b), 50 per cent of BACP respondents answered that it would make it easier to establish whom to employ, whereas 78 per cent of BPC and 71 per cent of UKCP respondents agreed.

On the effect on clarity for students choosing training pathways (Q1c), 57 per cent of BACP respondents were positive, compared with 84 per cent of BPC and 78 per cent of UKCP. Similarly, 50 per cent of BACP members answering the survey believed SCoPEd would make promotion of members’ skills by professional organisations easier (Q1d), whereas 75 per cent (BPC) and 73 per cent (UKCP) felt the same.

What are we to make of this? Is it surprising that organisations representing those identifying more often as ‘psychotherapists’ (and in BPC’s case, exclusively psychoanalytic psychotherapists), rather than ‘counsellors’, would favour a framework that places psychoanalytic psychotherapy at the top of a hierarchy of practice? We also note with as little cynicism as we can manage the close ties these organisations have with training programmes that would profit from such an assertion or reassertion of superiority.

Despite the deeply problematic nature of the consultation methodology, as shown in this article, and the lack of any real endorsement of the project in the results – not to mention the widespread dissatisfaction with the framework (particularly amongst ‘counsellors’ and especially the under-represented person-centred/experiential/existential/humanistic communities), as well as the substantive critiques of the political agendas and claimed ‘evidence base’ of the project – despite all this, BACP, BPC and UKCP assert nonetheless that, ‘we have an early indication that we should progress this work’.

Surely, if anything, a dispassionate viewpoint would be that there is an ‘early indication’ that the entire project is deeply flawed, and is pursuing a path that a substantial portion of the field finds at best misguided, and at worst a complete betrayal of their practices. In what sense, then, can this work be said to be happening ‘alongside our memberships’?

Progressing the SCoPEd framework in anything like an ethical way would mean reappraising every single aspect of it: its motivations and intentions, its assumptions, its methodology, its form, the composition of its ‘expert reference group’, the ‘independent’ chair, the disputed ‘evidence base’, the nature of further consultations, and so on.

Is there any will at all to do this within BACP, BPC and UKCP? The leaderships of your organisations may ‘acknowledge’ the ‘strength of feeling’ in the debates around SCoPEd, but how can they possibly continue with the project in this form, knowing the numerous substantive critiques of the project and its current functioning?

Perhaps the 3,000 consultation comments, as yet not analysed by the ‘independent research company’, hold some of the answers. Could all of these comments, and all other relevant data beyond what you have released thus far, be published on one of your websites? We are serious about this and consider it normal good practice for a consultation. Not doing so, or refusing to release the comments, will leave you open to allegations of cooking the books.

In the meantime, the organisations sending this letter would welcome open dialogue, above all in a public format, with BACP, BPC and UKCP about the future of the therapy field.

Collegial greetings from,

The Alliance for Counselling and Psychotherapy

Psychotherapists and Counsellors for Social Responsibility

The Psychotherapy and Counselling Union

The College of Psychoanalysts

The National Counselling Society

19 March 2019: post amended to add the National Counselling Society to the letter signatories.
